Solving a facility location problem in near-linear time

0. Problem Description

Given a list of household locations and candidate facility locations . Your task is to place hospitals, i.e. choose a subset , such that is minimized. In other words, if we add up the minimum distance to a household to any of the chosen hospitals, the sum should be the minimum possible.

I. Basic solution

One key observation is that people going to the same hospital must live in neighbouring locations. The proof is as follows:

If someone on your left and someone on your right are both choosing the same hospital , you should go to the same one as well. If you go to any hospital that is left of , it would be better for that person on your left to go to hospital as well. Similarly for going to a hospital that is right of .

Therefore, we can formulate this problem as partitioning the people into (at most) contiguous sections, then assigning a hospital to each section, so that the total distance is minimized.

With this in mind, we define the dp states as follows:

Definition 1 (DP states):

Let be the minimum total distance for people to , if we assign them to the same section (same hospital).

Let be the minimum total distance for people to , if we assign them to sections.

The decision for would be where to start the -th section (we know that it ends at ). Thus we arrive at the recurrence

If we implement this recurrence straightforwardly, the runtime would depend on the time needed to compute function. The baseline is per query: try all hospital locations and compute total cost in time. This leads to a solution with overall runtime .

Below we describe a few optimizations:

- Precomputation: We can precompute for all and , to avoid repeated computations in the dp recurrence.

- Prefix sum/partial sum: We can compute the total distance to a specific hospital (say at ) for people to in time, using prefix sum. The idea is that there is a break-off point so that people to are to the left of and people to are to the right of . Then, the sum becomes , which can be computed in with a prefix sum on the array and if can be found in (how?).

- Median: for a contiguous section of people , if we can build a hospital freely, then choosing a median of the locations would be optimal (proof left as exercise). By extension, we should choose either the location that is immediately to the left of the median, or immediately to the right of the median. We don’t need to consider all candidate locations.

Using either of the observations, the overall runtime can be reduced to quartic, e.g. using (1). By combining at least two of the three observations, you can get the overall runtime down to cubic or nearly cubic, e.g. by combining (1) and (2).

II. Divide and conquer…?

It turns out that using an advanced optimization technique, called the “Divide and Conquer optimization” in the competitive programming community, we can obtain a solution with runtime , i.e. nearly quadratic!

First, precompute for all in time. This can be done by combining all the three optimizations.

Now, on to the DP part. We will fill the DP table row by row. The goal is to compute given in time. Since we need to do this times, the overall runtime (taking into account the precomputation) will be .

The idea here is to use an additional solution structure: monotonicity of the optimal subproblem. Let me first present formally:

Proposition 2 (monotonicity of optimal subproblem)

Let be the smallest index , such that is minimized. Then, .

In other words, if it is optimal to group people in an -group arrangement, and if it is optimal to group people in an -group arrangement, then . The key element of the proof is that the cost function satisfies the quadrangle inequality: for , . The proof is not very easy. If you want to check your proof or know how it’s done, please reach out to me.

With this, we can prove the monotonicity of optimal subproblem. Suppose on the contrary that for some . By definition of we have:

Adding up the two, and cancelling common terms on both sides of the inequality, we get . Taking , this becomes , which is a violation of the quadrangle inequality.

Let me give you an idea of why this property would help. Short answer: to reduce search space.

Without this monotonicity property, one would need to go through subproblems to calculate . But suppose you know that . Then we only need to go through to calculate .

There is one small issue: if turns out to be really small, then it appears to be ineffective in narrowing the search range. However, notice that we can use it to narrow the search range for : we only need to go through to calculate !

To calculate , let , and let . Then, to calculate we only need to look at subproblems , and to calculate we only need to look at subproblems . We do this recursively to further narrow the search range — divide and conquer :)

For the implementation, you can refer to Slides 67-72 of this presentation (external link) which I made a few years back. The slides use instead of .

III. Faster than quadratic

This is not the end of the story. In fact we can solve the problem in time !! If you turn a blind eye to those logs, there is only one lonely term left… (Why is this surprising? Well, even the number of states of the DP is quadratic, so how can the runtime be less than that?)

This magic trick is called the “Aliens trick” in competitive programming community. It is useful for eliminating one of your DP state dimensions. In the context of this problem, the idea is as follows:

- Instead of keeping track of number of sections (= number of hospitals) so far, assign a penalty to each section (i.e. it costs to build a hospital), and hope that in the end the number of sections will be equal to .

So, let be the minimum total cost for people to , if we assign them into any number of sections, but each section increases the cost by . Then,

Claim 1: We can compute in time

Claim 2: We can find a suitable , by computing for different values of . is the range of the input numbers

For claim 1, let me refer you to this tutorial (external link). Again, the algorithm works because the cost function satisfies the quadrangle inequality. The quadrangle inequality means that, once subproblem is dominated by some later subproblem (i.e. for some ), it will not be optimal anymore (i.e. for all ). The method in the tutorial essentially looks to maintain the set of “active” subproblems.

We need to compute the cost function times, each computation taking time (combining optimizations 2 and 3; note that it is too slow to precompute the cost functions!). The runtime is therefore .

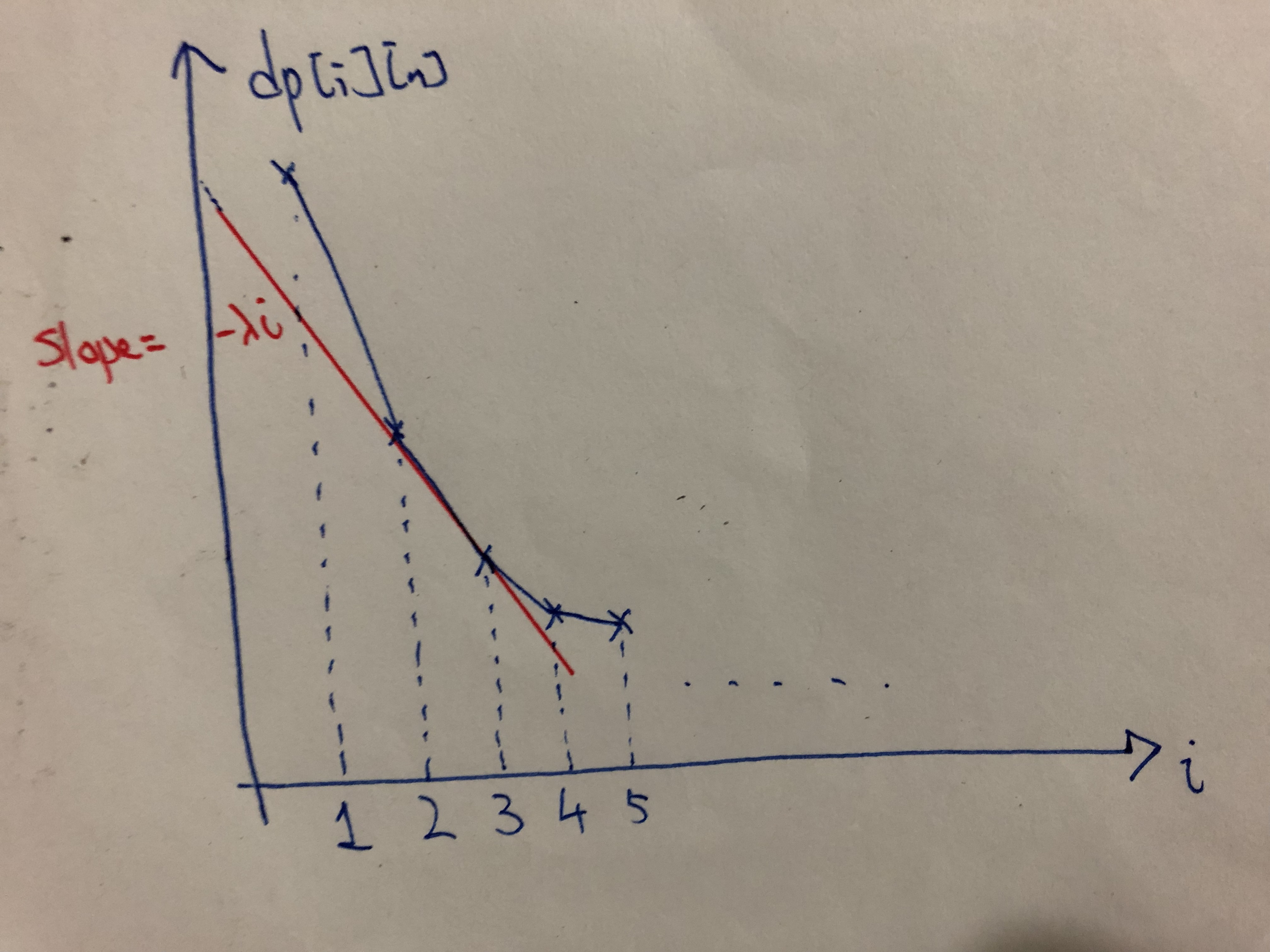

For claim 2, refer to this diagram below:

Varying is akin to varying the slope of the red line, and the point that is “closest” to the line corresponds to the optimal number of hospitals . Let’s consider extremal examples: when is very big, the cost of building a hospital is high, so it should be optimal to have . When is zero, there is no cost to build a hospital, so it should be optimal to have (build hospitals at all candidate locations).

If the function is convex (i.e. the graph above is convex), then by varying we can make all different values of optimal (or one of the optimal), and we can binary search on to find the so that building hospitals is optimal. Binary search would take iterations (note that we only need to check integer values of ), and in each iteration we would compute for some .

To check convexity of , check that . The idea is that, given an optimal partition into groups and an optimal partition into groups, we can construct two partitions and , both into groups, so that their sum of costs is at most the sum of costs of and (this step uses the quadrangle inequality).

Therefore, we have an solution to the facility location problem.

IV. Afterword

Dynamic programming is a powerful algorithm paradigm. This course has shown you the basic examples and ideas that help demystify DP and turn it from magic to craft, something that you can reliably use to tackle problems. Hopefully, this writeup has given you a glimpse of the beautiful ideas and techniques that DP incorporate, to show that DP is not just a craft, but also an art.